...

- For quick and easy testing, you can use WiscNet's HTML-based browser test:

...

| Flag | Details | Example |
|---|---|---|
| -c | Client mode: connect to the given server | -c iperf.wiscnet.net |
| -t | Time to run the test, in seconds | -t 30 |
| -P | Number of parallel connections | -P 2 |
| -u | Use UDP (default is TCP) | -u |
| -b | Bandwidth per thread | -b 250m |
| -i | Interval between bandwidth reports, in seconds | -i 1 |
| -L | Port to listen on for the return leg of a bidirectional test | -L 5001 |
| -r | Bidirectional test, one direction at a time | -r |
| -d | Bidirectional test, both directions simultaneously | -d |
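As a sketch of how these flags combine, the hypothetical Python helper below builds the client command line for a UDP test. It assumes iperf version 2 is installed as iperf, and it splits a total target rate evenly across the -P streams because -b sets the bandwidth per thread; that is why the 1Gbps examples pair -P 4 with -b 250m. The function name and parameters are illustrative only, and the helper does not run iperf itself.

```python
def build_iperf_udp_args(host, total_mbps, parallel=4, seconds=10, interval=1):
    """Build an iperf (version 2) client argument list for a UDP test.

    Hypothetical helper: the total target rate is divided across the
    parallel streams, since -b applies to each thread separately.
    """
    if parallel < 1:
        raise ValueError("parallel must be at least 1")
    # Whole Mbit/s per stream; any remainder is dropped (rounds down).
    per_thread_mbps = int(total_mbps // parallel)
    return [
        "iperf",
        "-c", host,                   # client mode, connect to this server
        "-t", str(seconds),           # test duration in seconds
        "-P", str(parallel),          # number of parallel streams
        "-u",                         # use UDP instead of TCP
        "-b", f"{per_thread_mbps}m",  # bandwidth for each stream
        "-i", str(interval),          # seconds between interim reports
    ]


if __name__ == "__main__":
    # Target a full 1 Gbit/s circuit with four streams.
    print(" ".join(build_iperf_udp_args("iperf.wiscnet.net", total_mbps=1000)))
```

To actually run the test from a script, the returned list could be passed to subprocess.run.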

...

TCP vs UDP testing

Iperf uses TCP by default. TCP has built-in congestion avoidance: if it detects packet loss, it assumes the link capacity has been reached and slows down. This works well unless the loss has a cause other than congestion. If packets are lost to errors, TCP backs off even when there is plenty of spare capacity. iperf lets TCP send as fast as it can, which generally fills a clean, low-latency link. If a path is not error-free or has high latency, TCP will have a hard time filling it. When testing higher-capacity or higher-latency links, you will want to adjust the TCP window size with the -w option. See the KB article on TCP performance for more background.
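The window-size advice follows from the bandwidth-delay product: to keep a path full, TCP needs roughly bandwidth × round-trip time worth of data in flight. The minimal Python sketch below computes that figure in bytes as a starting point for -w. The bdp_bytes name and the 20 ms round-trip time are assumptions for illustration, and the operating system may cap the window iperf actually gets.

```python
def bdp_bytes(bandwidth_bps: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes (rounded up).

    bits in flight = bandwidth_bps * (rtt_ms / 1000); bytes = bits / 8,
    so bytes = bandwidth_bps * rtt_ms / 8000. Integer math avoids
    floating-point rounding surprises.
    """
    return (bandwidth_bps * rtt_ms + 7999) // 8000


if __name__ == "__main__":
    # Hypothetical path: 1 Gbit/s with a 20 ms round-trip time.
    print(bdp_bytes(1_000_000_000, 20))  # 2500000 bytes, about 2.4 MiB
```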

The -u option tells iperf to use UDP instead of TCP. UDP has no built-in congestion avoidance, and iperf does not implement any either. A UDP test needs a target bandwidth; if you do not specify one, iperf defaults to 1 Mbit/s. Use the -b option to set the bandwidth to test. iperf then sends packets at the requested rate for the requested period of time, and the receiving end compares how many packets arrived with how many were sent and reports its results.
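The rate and loss arithmetic behind a UDP test can be sketched in Python as below. It assumes the 1470-byte datagrams reported in the sample output's "Sending 1470 byte datagrams" line and reads -b 250m as 250 Mbit/s; the function names are hypothetical, and the loss calculation mirrors the sent-versus-received comparison described above rather than iperf's own code.

```python
# Payload bytes per datagram, taken from the sample output (an assumption
# if your iperf build or options use a different size).
DATAGRAM_BYTES = 1470


def datagrams_per_second(rate_bps: float, datagram_bytes: int = DATAGRAM_BYTES) -> float:
    """Datagrams per second needed to send at rate_bps."""
    return rate_bps / (datagram_bytes * 8)


def loss_percent(sent: int, received: int) -> float:
    """Percentage of sent datagrams that never arrived."""
    if sent <= 0:
        raise ValueError("sent must be a positive count")
    return 100.0 * (sent - received) / sent


if __name__ == "__main__":
    # One stream at 250 Mbit/s needs roughly 21,259 datagrams per second.
    print(round(datagrams_per_second(250_000_000)))
    # 990 of 1,000 datagrams arriving is 1% loss.
    print(loss_percent(1000, 990))
```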

Examples


Unidirectional 1Gbps circuit test

Command

```
iperf -c iperf.wiscnet.net -t 10 -P 4 -u -b 250m -i1
```

Results

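When running multiple threads, look at the [SUM] lines for the total throughput. The output below shows about 953 Mbits/sec getting through in each one-second interval and 954 Mbits/sec over the full 10-second test. The "read failed" and "WARNING: did not receive ack of last datagram" lines at the end indicate that the client did not get the server's end-of-test report, so the server-side loss figures are not shown.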

```
$ iperf -c iperf.wiscnet.net -t 10 -P 4 -u -b 250m -i1
------------------------------------------------------------
Client connecting to iperf.wiscnet.net, UDP port 5001
Sending 1470 byte datagrams
UDP buffer size: 208 KByte (default)
------------------------------------------------------------
[ 5] local 10.0.10.105 port 44098 connected with 205.213.14.56 port 5001
[ 3] local 10.0.10.105 port 46090 connected with 205.213.14.56 port 5001
[ 4] local 10.0.10.105 port 38200 connected with 205.213.14.56 port 5001
[ 6] local 10.0.10.105 port 59296 connected with 205.213.14.56 port 5001
[ ID] Interval Transfer Bandwidth
[ 5] 0.0- 1.0 sec 28.4 MBytes 238 Mbits/sec
[ 3] 0.0- 1.0 sec 28.5 MBytes 239 Mbits/sec
[ 4] 0.0- 1.0 sec 28.6 MBytes 240 Mbits/sec
[ 6] 0.0- 1.0 sec 28.6 MBytes 240 Mbits/sec
[SUM] 0.0- 1.0 sec 114 MBytes 957 Mbits/sec
[ 5] 1.0- 2.0 sec 28.5 MBytes 239 Mbits/sec
[ 3] 1.0- 2.0 sec 28.4 MBytes 238 Mbits/sec
[ 4] 1.0- 2.0 sec 28.5 MBytes 239 Mbits/sec
[ 6] 1.0- 2.0 sec 28.2 MBytes 236 Mbits/sec
[SUM] 1.0- 2.0 sec 114 MBytes 953 Mbits/sec
[ 5] 2.0- 3.0 sec 28.4 MBytes 238 Mbits/sec
[ 3] 2.0- 3.0 sec 28.2 MBytes 237 Mbits/sec
[ 4] 2.0- 3.0 sec 28.6 MBytes 240 Mbits/sec
[ 6] 2.0- 3.0 sec 28.5 MBytes 239 Mbits/sec
[SUM] 2.0- 3.0 sec 114 MBytes 953 Mbits/sec
[ 5] 3.0- 4.0 sec 28.3 MBytes 238 Mbits/sec
[ 3] 3.0- 4.0 sec 28.3 MBytes 238 Mbits/sec
[ 4] 3.0- 4.0 sec 28.5 MBytes 239 Mbits/sec
[ 6] 3.0- 4.0 sec 28.4 MBytes 239 Mbits/sec
[SUM] 3.0- 4.0 sec 114 MBytes 952 Mbits/sec
[ 5] 4.0- 5.0 sec 28.3 MBytes 237 Mbits/sec
[ 3] 4.0- 5.0 sec 28.3 MBytes 238 Mbits/sec
[ 4] 4.0- 5.0 sec 28.3 MBytes 238 Mbits/sec
[ 6] 4.0- 5.0 sec 28.7 MBytes 241 Mbits/sec
[SUM] 4.0- 5.0 sec 114 MBytes 954 Mbits/sec
[ 5] 5.0- 6.0 sec 28.6 MBytes 240 Mbits/sec
[ 3] 5.0- 6.0 sec 28.4 MBytes 238 Mbits/sec
[ 4] 5.0- 6.0 sec 28.3 MBytes 238 Mbits/sec
[ 6] 5.0- 6.0 sec 28.5 MBytes 239 Mbits/sec
[SUM] 5.0- 6.0 sec 114 MBytes 955 Mbits/sec
[ 5] 6.0- 7.0 sec 28.5 MBytes 239 Mbits/sec
[ 3] 6.0- 7.0 sec 28.3 MBytes 238 Mbits/sec
[ 4] 6.0- 7.0 sec 28.5 MBytes 239 Mbits/sec
[ 6] 6.0- 7.0 sec 28.3 MBytes 237 Mbits/sec
[SUM] 6.0- 7.0 sec 114 MBytes 953 Mbits/sec
[ 5] 7.0- 8.0 sec 28.4 MBytes 238 Mbits/sec
[ 3] 7.0- 8.0 sec 28.4 MBytes 238 Mbits/sec
[ 4] 7.0- 8.0 sec 28.3 MBytes 238 Mbits/sec
[ 6] 7.0- 8.0 sec 28.5 MBytes 239 Mbits/sec
[SUM] 7.0- 8.0 sec 114 MBytes 953 Mbits/sec
[ 5] 8.0- 9.0 sec 28.5 MBytes 239 Mbits/sec
[ 3] 8.0- 9.0 sec 28.3 MBytes 237 Mbits/sec
[ 4] 8.0- 9.0 sec 28.4 MBytes 238 Mbits/sec
[ 6] 8.0- 9.0 sec 28.4 MBytes 238 Mbits/sec
[SUM] 8.0- 9.0 sec 114 MBytes 953 Mbits/sec
read failed: Connection refused
[ 3] WARNING: did not receive ack of last datagram after 1 tries.
[ 5] 9.0-10.0 sec 28.5 MBytes 239 Mbits/sec
[ 5] 0.0-10.0 sec 284 MBytes 239 Mbits/sec
[ 5] Sent 202875 datagrams
[ 3] 0.0-10.0 sec 284 MBytes 238 Mbits/sec
[ 3] Sent 202276 datagrams
[ 4] 0.0-10.0 sec 284 MBytes 239 Mbits/sec
[ 4] Sent 202852 datagrams
[ 6] 0.0-10.0 sec 285 MBytes 239 Mbits/sec
[ 6] Sent 203078 datagrams
[SUM] 0.0-10.0 sec 1.11 GBytes 954 Mbits/sec
read failed: Connection refused
[ 5] WARNING: did not receive ack of last datagram after 5 tries.
read failed: Connection refused
[ 6] WARNING: did not receive ack of last datagram after 9 tries.
[ 4] WARNING: did not receive ack of last datagram after 10 tries.
```
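Because the per-stream lines add up to the [SUM] line, a scripted test usually only needs the [SUM] lines. The Python sketch below pulls them out of saved iperf output with a regular expression. It assumes the iperf 2 text layout shown in the sample output and only handles Kbits, Mbits, and Gbits rates; the sum_rates name is hypothetical.

```python
import re

# Matches [SUM] report lines such as:
#   [SUM] 0.0- 1.0 sec 114 MBytes 957 Mbits/sec
#   [SUM] 0.0-10.0 sec 1.11 GBytes 954 Mbits/sec
SUM_LINE = re.compile(
    r"^\[SUM\]\s+(?P<start>\d+\.\d+)-\s*(?P<end>\d+\.\d+)\s+sec\s+"
    r"\S+\s+\S+\s+(?P<rate>\d+(?:\.\d+)?)\s+(?P<unit>[KMG])bits/sec"
)

# Conversion factors from the reported unit to Mbit/s.
UNIT_TO_MBPS = {"K": 1e-3, "M": 1.0, "G": 1e3}


def sum_rates(output: str):
    """Return (start_s, end_s, mbit_per_s) for each [SUM] line in the output."""
    results = []
    for line in output.splitlines():
        match = SUM_LINE.match(line.strip())
        if match:
            mbps = float(match["rate"]) * UNIT_TO_MBPS[match["unit"]]
            results.append((float(match["start"]), float(match["end"]), mbps))
    return results


if __name__ == "__main__":
    sample = """[ 5] 0.0- 1.0 sec 28.4 MBytes 238 Mbits/sec
[SUM] 0.0- 1.0 sec 114 MBytes 957 Mbits/sec
[SUM] 1.0- 2.0 sec 114 MBytes 953 Mbits/sec
[SUM] 0.0-10.0 sec 1.11 GBytes 954 Mbits/sec"""
    # The last [SUM] line covers the whole test.
    print(sum_rates(sample)[-1])
```

The whole-test summary is the last [SUM] line, whose interval spans the full -t duration; the earlier ones are the per-interval reports requested with -i.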

...


Bidirectional 1Gbps circuit test

Note: With -r, the server connects back to your host to run the reverse direction, so check your firewall and NAT settings to ensure port 5001 (or the port set with -L) is open to your host.

Command

```
iperf -c iperf.wiscnet.net -t 10 -P 4 -u -b 250m -i1 -r
```

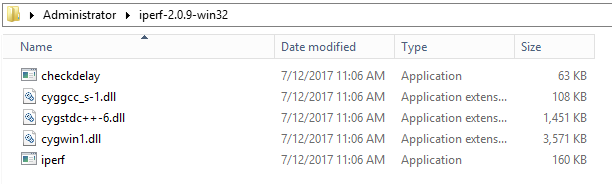

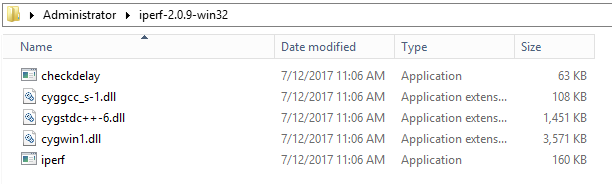

Installation Guides

Microsoft Windows

...